This project made up the first semester of a year-long project that culminated in a new treatment for visual vertigo. On this page I'll describe, as briefly as possible, what the project was about and what I learnt from it, covering only the main areas. The second-semester project, which continues from this one, has its own page.

Virtual Eye Test

The first semester was about getting to grips with virtual reality hardware and software. We were provided with an Oculus Rift DK2 for the project, but the DK2 needs a reasonably powerful PC, and during the first semester we had very limited access to one. We therefore switched to testing Google Cardboard, a budget VR solution, to see how well it worked and whether it would be suitable for treating patients in virtual reality. To do this, we built a virtual reality eye test using the Unity game engine.

Google Cardboard is fine as a budget VR solution, but, as you might expect, it has plenty of issues. Something you might not expect is that the quality of the experience varies wildly depending on the phone used with the Cardboard. The main devices we tested were the Samsung S6 Edge+ and Samsung Note 3, flagship Android phones released only two years apart, and even so we found a substantial difference in quality. In short, tracking is much better with the newer phone. I would not call the tracking responsive on any phone we tested, but it was clearly more responsive on the Edge+. Tracking quality matters a great deal in VR, because poor tracking can contribute to simulator sickness.

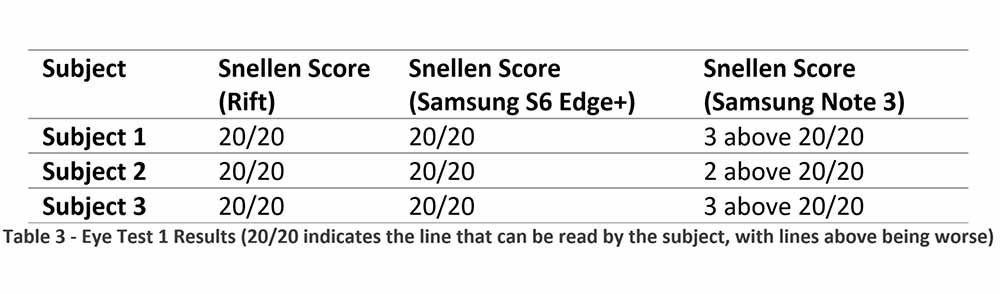

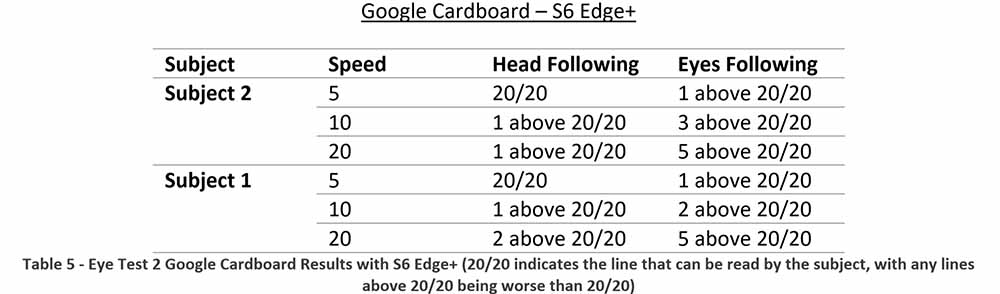

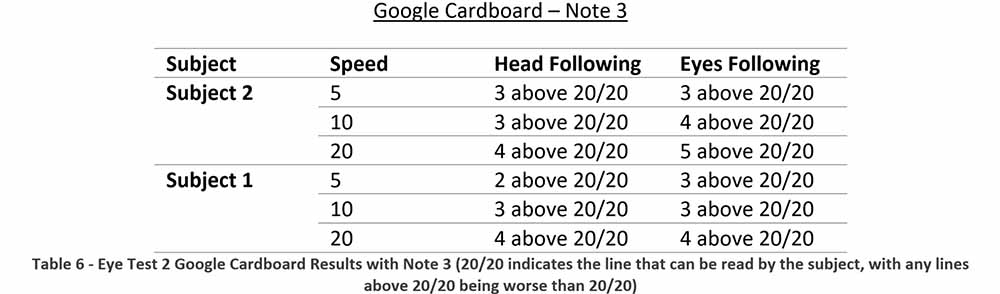

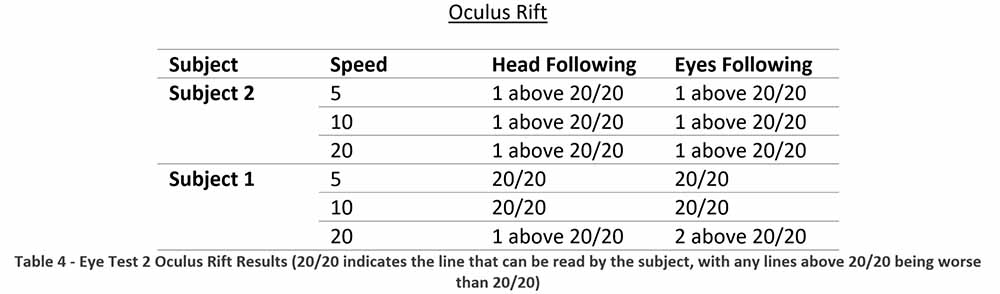

The virtual eye test was a good way to compare the devices, and we ran it in two ways. The first was the standard test: a user looked at a stationary Snellen chart in virtual reality and tried to read off the letters, and their score was recorded. This tests clarity of vision. As mentioned above, tracking is also an important issue, so for the second test we made the Snellen chart move left and right and recorded how well a user could read the letters while it moved. Two scenarios were tested with the moving chart. In the first, the user kept their head facing forward and followed the chart with their eyes only; in the second, the user kept their eyes still and followed the chart by moving their head, which made it a direct test of tracking. We compared these results with each user's score when reading a real Snellen chart at the same distance.
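To make the chart design a little more concrete, here is a small sketch of the two calculations a virtual Snellen chart needs, written in Python rather than the Unity C# the project actually used. It assumes the standard Snellen convention that a 6/6 (20/20) letter subtends 5 arcminutes at the eye, and it assumes the chart sweeps left and right at a constant speed between two turning points; the exact motion profile of the original test isn't described here, and the function names `letter_height_m` and `chart_x` are hypothetical.

```python
import math

# Conversion factor from arcminutes to radians.
ARCMIN_TO_RAD = math.pi / (180 * 60)


def letter_height_m(numerator: float, denominator: float, viewing_distance_m: float) -> float:
    """Height (in metres) of a letter on Snellen line numerator/denominator.

    Assumes the standard convention: a 6/6 letter subtends 5 arcminutes,
    a 6/12 letter subtends 10 arcminutes, and so on. The letter is sized so
    it subtends that angle at the given viewing distance.
    """
    angle_rad = 5.0 * (denominator / numerator) * ARCMIN_TO_RAD
    return 2.0 * viewing_distance_m * math.tan(angle_rad / 2.0)


def chart_x(t: float, speed_m_s: float, amplitude_m: float) -> float:
    """Horizontal offset (in metres) of a chart sweeping left and right.

    Hypothetical motion model: constant speed, starting at the centre and
    moving right, turning around at +amplitude_m and -amplitude_m.
    """
    s = (speed_m_s * t + amplitude_m) % (4.0 * amplitude_m)
    return s - amplitude_m if s <= 2.0 * amplitude_m else 3.0 * amplitude_m - s


if __name__ == "__main__":
    # Letter heights for a chart placed 6 m away in the virtual scene.
    for denom in (60, 36, 24, 18, 12, 9, 6):
        print(f"6/{denom}: {letter_height_m(6, denom, 6.0) * 1000:.1f} mm")
```

Sizing letters by visual angle rather than by a fixed size is what allows a fair comparison with a real chart read at the same distance, since the same line then subtends the same angle in both. In Unity, `Mathf.PingPong` produces a similar constant-speed back-and-forth value that could drive the chart's position.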

Results

In the tables above, speeds are in m/s, and "Rift" always means the Rift DK2. As the results show, there are quite large differences between some of the devices.

Although this project began slowly because of a lack of available resources, it ended well. The test was fun to design and carry out, though we had only a small number of people to test on. The Edge+ results approach those of the Rift DK2 for the stationary chart, but it's important to note that the DK2 was more comfortable to use thanks to its superior tracking, and this shows in the results for the moving chart. Another interesting point is that the Note 3 and Rift DK2 use the exact same 1080p display, whereas the Edge+ has a 1440p display. Because the Note 3 shares the DK2's display, the differences between their results cannot be put down to screen resolution; this shows just how significant the differences in tracking and optics were between a phone in a cheap Google Cardboard kit and the Rift DK2. There are many more points that could be discussed, but I said I would keep this brief.

At the end of this project, I produced a 10,300-word report for assessment. I was also assessed on my lab book and a viva, which is essentially an interview.

Summary of learned or improved skills:

- Photoshop

- Word

- Unity game engine

- C#

- Physics